
I wrote a post a while ago with my tips for people who are starting a physics degree. Just after I published it, a friend and fellow postgraduate, Sam Cooper, pointed out that I had made a fairly glaring omission in my summary of undergraduate physics: vectors. I was going to sneak in an extra section, but I had a think and figured that it actually deserved its own post, so here goes.

If you’ve done A-level physics, I’m sure you’ve come across the idea of a scalar before. Scalars are essentially just numbers that are used to represent quantities. They can only represent the magnitude of a measure, or put simply, how much of something there is. Vectors are mathematical objects that represent not only how much of something there is, but also the direction in which it acts. This makes them incredibly useful, and as a result they are used extensively throughout physics. In an undergraduate degree you’ll most likely make the most use of vectors in vector mechanics and electromagnetism. I’ll go through some applications at the end, but first some theory.

Vector Algebra

Most people are used to manipulating scalars in the form of algebra, but when we’re using vectors we need some slightly different rules for manipulating mathematical expressions. This is the field of vector algebra. Because of these different rules it’s common for vectors to symbolised differently in equations. The problem is that there isn’t a globally consistent way of doing it. In some texts vectors are represented by emboldening the symbols, such as ![]() . Another way, and the way which I shall use in this post, is by placing an arrow across the top of the symbol

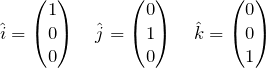

. Another way, and the way which I shall use in this post, is by placing an arrow across the top of the symbol ![]() . Just for the sake of formality, I’m going to be working in a 3 dimensional Euclidean space, the basis vectors of which consist of 3 orthonormal components. This may sound difficult to understand, so it’s worth explaining what this actually means. An orthonormal basis means that you build your vectors from basis vectors of unit length in that space and that none of the basis vectors can be projected into any of the others; they are all at right angles to one another. The basis vectors of Euclidean space are generally represented as

. Just for the sake of formality, I’m going to be working in a 3 dimensional Euclidean space, the basis vectors of which consist of 3 orthonormal components. This may sound difficult to understand, so it’s worth explaining what this actually means. An orthonormal basis means that you build your vectors from basis vectors of unit length in that space and that none of the basis vectors can be projected into any of the others; they are all at right angles to one another. The basis vectors of Euclidean space are generally represented as ![]() .

. ![]() and

and ![]() . These vectors lie along the x, y and z axes respectively. They can be represented in vector notation like so.

. These vectors lie along the x, y and z axes respectively. They can be represented in vector notation like so.

(1)

Now that we have correctly defined the basis, we can begin to make up our own vectors from these basis vectors. We do this by writing down how much of each basis vector we want. So let’s say for example we have a point, which we shall call ![]() , which is at

, which is at ![]() ,

, ![]() and

and ![]() . To express where this point is in 3D space we need to add together the right combination of basis vectors. We know that the basis vector

. To express where this point is in 3D space we need to add together the right combination of basis vectors. We know that the basis vector ![]() is in the positive

is in the positive ![]() direction and is a unit vector, so it represents +1 in the

direction and is a unit vector, so it represents +1 in the ![]() direction. The same reasoning goes for

direction. The same reasoning goes for ![]() in the

in the ![]() direction and

direction and ![]() in the

in the ![]() direction. This means that we can represent the vector

direction. This means that we can represent the vector ![]() like so:

like so:

(2) ![]()

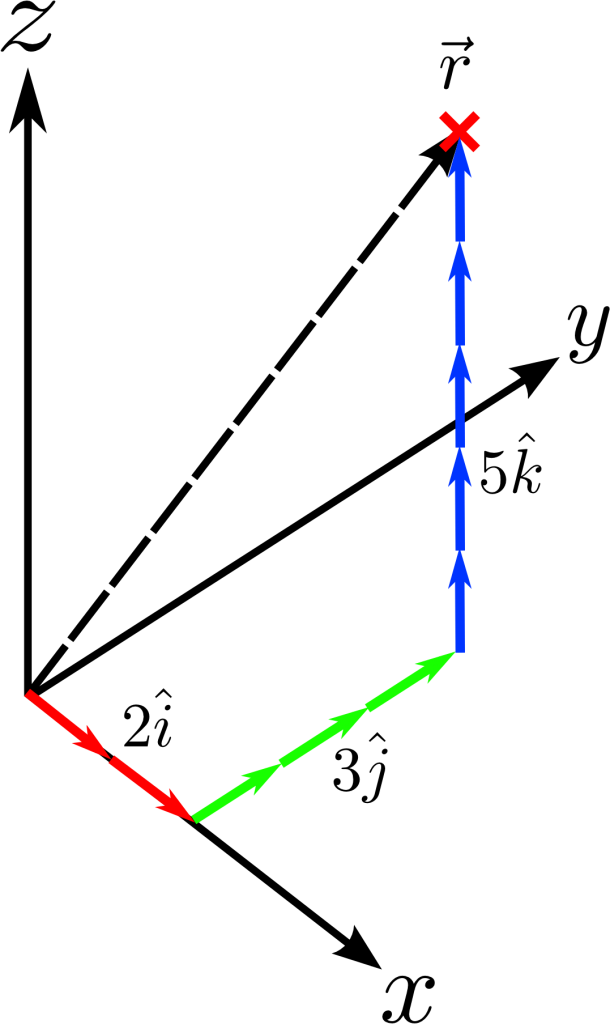

This vector can be illustrated schematically like so:

Vectors are made up from the basis vectors of a given space. In this example the vector ![]() is made up of

is made up of ![]() (red),

(red), ![]() (green) and

(green) and ![]() (blue). The black dashed line shows the resultant vector.

(blue). The black dashed line shows the resultant vector.

It can be a bit cumbersome using this notation all the way through the problem, so there’s an easy alternative. If we assume that all the vectors in a given problem use this same set of basis vectors we can also represent in short hand like so:

(3)

The same basis is necessary as in this notation there is nothing explicitly stating the basis. If in doubt just revert back to the long hand notation, shown in equation 2, as it leaves little room for ambiguity.

Vector Addition and Subtraction

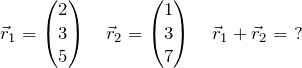

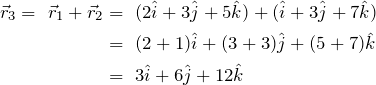

Now that we have established the structure of a vector, we can start to work with them. The first operations you should know, as with normal algebra, are addition and subtraction. Let’s say we have two vectors and we want to add them together:

$$\vec{a} = a_x\hat{i} + a_y\hat{j} + a_z\hat{k}, \qquad \vec{b} = b_x\hat{i} + b_y\hat{j} + b_z\hat{k} \tag{4}$$

What we are essentially going to do here is once again decompose these vectors into the amounts of their basis vectors and add them all up. I’ll do this in longhand notation for clarity:

$$\vec{a} + \vec{b} = (a_x + b_x)\hat{i} + (a_y + b_y)\hat{j} + (a_z + b_z)\hat{k} \tag{5}$$

The same methodology applies if you want to subtract one vector from another, except that instead of adding the components together we take one set away from the other:

$$\vec{a} - \vec{b} = (a_x - b_x)\hat{i} + (a_y - b_y)\hat{j} + (a_z - b_z)\hat{k} \tag{6}$$

You should note that a component of the result can be negative: whenever a component of $\vec{b}$ is larger than the matching component of $\vec{a}$, the difference has a negative contribution along that basis vector. A component can also vanish entirely when the two matching components are equal. The result then contains, say, $0\hat{j}$, so there’s no need to write that term down.
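The component-wise rules in equations 5 and 6 are easy to try out in code. Below is a minimal Python sketch, assuming vectors are stored as plain 3-tuples of their $\hat{i}$, $\hat{j}$ and $\hat{k}$ components; the function names and the example vectors are my own illustrative choices, not anything standard.

```python
# Vectors are stored as 3-tuples (x, y, z) of their i, j, k components.
# The example vectors are illustrative choices, not values from this post.


def add(a, b):
    """Component-wise sum, as in equation 5."""
    return tuple(ai + bi for ai, bi in zip(a, b))


def subtract(a, b):
    """Component-wise difference, as in equation 6."""
    return tuple(ai - bi for ai, bi in zip(a, b))


a = (1, 2, 3)
b = (4, 2, -1)

print(add(a, b))       # (5, 4, 2)
print(subtract(a, b))  # (-3, 0, 4)
```

With these example values the difference has both a negative $\hat{i}$ component and a zero $\hat{j}$ component, which is exactly the situation described above.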

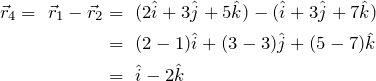

The Magnitude of a Vector

At this point it’s convenient to introduce a new concept. When calculating things, it’s often useful to work out the size of a quantity. With scalars this is simple, as the number itself (ignoring its sign) already tells you how much of the quantity there is. However, a small amount of calculation is required to extract this information from a vector. This measure is called the magnitude, or Euclidean norm, of a vector. It gives us the actual length of the vector and disregards the direction information. For a vector $\vec{a} = a_x\hat{i} + a_y\hat{j} + a_z\hat{k}$, we can calculate it by adding up the squares of all the components and then taking the square root, like so:

$$|\vec{a}| = \sqrt{a_x^2 + a_y^2 + a_z^2} \tag{7}$$

You’ll notice that the notation for the magnitude of a vector is the symbol for the vector with pipes placed around it, $|\vec{a}|$. This convention is actually fairly universal, so it should be easy to spot if you look out for the pipes on worksheets and in textbooks. Just as an example, I’ll calculate the norms of two vectors $\vec{a}$ and $\vec{b}$:

$$|\vec{a}| = \sqrt{a_x^2 + a_y^2 + a_z^2}, \qquad |\vec{b}| = \sqrt{b_x^2 + b_y^2 + b_z^2} \tag{8}$$
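Equation 7 translates directly into code. This is a sketch assuming the same tuple representation as before; the example vectors are arbitrary picks whose norms happen to be whole numbers.

```python
import math


def magnitude(v):
    """Euclidean norm (equation 7): square each component, sum, then take the square root."""
    return math.sqrt(sum(c * c for c in v))


print(magnitude((3, 4, 0)))  # 5.0
print(magnitude((1, 2, 2)))  # 3.0
```

In Python 3.8 and later, `math.hypot(*v)` computes the same quantity for any number of components and is the more robust choice when components are very large or very small.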

Vector Multiplication

This is where vectors get interesting. When working with scalars there is a single way to multiply two numbers together: the result is simply their product. In vector algebra, however, there are two different ways to multiply vectors: the dot product and the cross product. The dot product, or scalar product, of two vectors produces a scalar and can be thought of as how much of one vector is projected onto the other, scaled by the length of the other. In general, it is defined as:

$$\vec{a}\cdot\vec{b} = |\vec{a}|\,|\vec{b}|\cos\theta \tag{9}$$

where $\vec{a}$ and $\vec{b}$ are two arbitrary vectors and $\theta$ is the angle between them when they are placed tail to tail. It’s worth noting at this point that orthogonal vectors, such as our orthonormal basis vectors, have a dot product of 0, since $\cos 90^\circ = 0$: by definition there is no component of one projected onto the other. Using geometrical arguments, we can show that the dot product of two vectors is also given by:

$$\vec{a}\cdot\vec{b} = \sum_{i} a_i b_i = a_x b_x + a_y b_y + a_z b_z \tag{10}$$

where $a_i$ and $b_i$ are the $i$th components of the $\vec{a}$ and $\vec{b}$ vectors respectively.

The other way of calculating the product of two vectors is called the cross product, or the vector product, so called because it produces a vector. If we once again place the two vectors tail to tail, they define a hyperplane with one dimension fewer than the space; in our 3D case, a 2D plane. The cross product produces a vector that is normal to this plane, and hence orthogonal to both of the original vectors. The cross product is defined as:

(11) ![]()

where ![]() and

and ![]() are the vectors to be operated on,

are the vectors to be operated on, ![]() is the angle between them and

is the angle between them and ![]() is the unit vector that is normal to

is the unit vector that is normal to ![]() and

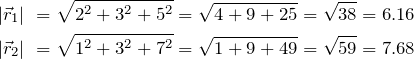

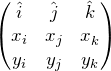

and ![]() . Working all of these out can be quite time consuming, but if you’re comfortable with some basic matrix manipulation there’s a much easier way to work this out. Let’s adopt a

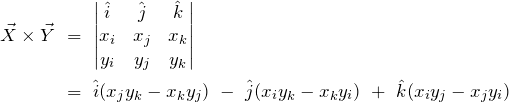

. Working all of these out can be quite time consuming, but if you’re comfortable with some basic matrix manipulation there’s a much easier way to work this out. Let’s adopt a ![]() matrix and populate it with the basis vectors on the first row, the corresponding components of the first vector on the second row and the corresponding components of the second vector on the first row. It should look a little something like this:

matrix and populate it with the basis vectors on the first row, the corresponding components of the first vector on the second row and the corresponding components of the second vector on the first row. It should look a little something like this:

(12)

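Now that we’ve constructed this matrix, if we take its determinant we find that it produces a vector, which happens to be the cross product. We can do this like so:

$$\begin{aligned} \vec{a}\times\vec{b} &= \begin{vmatrix} \hat{i} & \hat{j} & \hat{k} \\ a_x & a_y & a_z \\ b_x & b_y & b_z \end{vmatrix} \\ &= \hat{i}\begin{vmatrix} a_y & a_z \\ b_y & b_z \end{vmatrix} - \hat{j}\begin{vmatrix} a_x & a_z \\ b_x & b_z \end{vmatrix} + \hat{k}\begin{vmatrix} a_x & a_y \\ b_x & b_y \end{vmatrix} \\ &= (a_y b_z - a_z b_y)\hat{i} - (a_x b_z - a_z b_x)\hat{j} + (a_x b_y - a_y b_x)\hat{k} \end{aligned} \tag{13}$$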

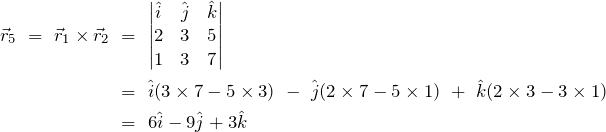

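Writing the cross product of $\vec{a}$ and $\vec{b}$ out in full, with the minus sign on the $\hat{j}$ term absorbed, and calling the result $\vec{d}$, we get:

$$\vec{d} = \vec{a}\times\vec{b} = (a_y b_z - a_z b_y)\hat{i} + (a_z b_x - a_x b_z)\hat{j} + (a_x b_y - a_y b_x)\hat{k} \tag{14}$$

We stated before that any vector that results from the cross product of two vectors should be orthogonal to both of them. We can verify this by taking the dot product of this derived vector $\vec{d}$ with $\vec{a}$ and with $\vec{b}$. If the vectors are orthogonal, both dot products should come back as zero, and expanding them shows that every term cancels with a partner:

$$\begin{aligned} \vec{d}\cdot\vec{a} &= (a_y b_z - a_z b_y)a_x + (a_z b_x - a_x b_z)a_y + (a_x b_y - a_y b_x)a_z = 0 \\ \vec{d}\cdot\vec{b} &= (a_y b_z - a_z b_y)b_x + (a_z b_x - a_x b_z)b_y + (a_x b_y - a_y b_x)b_z = 0 \end{aligned} \tag{15}$$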
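To check the algebra numerically, here is a small Python sketch of equations 9, 10 and 13, again assuming vectors are plain 3-tuples. The helper names (`dot`, `cross`, `angle_between`) and the example vectors are hypothetical choices for illustration; the cross product is written out using the component form of equation 13 rather than by building a symbolic determinant.

```python
import math


def dot(a, b):
    """Equation 10: sum of the products of matching components."""
    return sum(ai * bi for ai, bi in zip(a, b))


def cross(a, b):
    """Equation 13, expanded into its i, j, k components."""
    ax, ay, az = a
    bx, by, bz = b
    return (ay * bz - az * by,
            az * bx - ax * bz,
            ax * by - ay * bx)


def angle_between(a, b):
    """Equation 9 rearranged: theta = arccos(a.b / (|a||b|)), in radians."""
    cos_theta = dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))
    # Clamp in case round-off pushes the value just outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, cos_theta)))


a = (1, 2, 3)
b = (4, 5, 6)
d = cross(a, b)

print(d)                             # (-3, 6, -3)
print(dot(d, a), dot(d, b))          # 0 0  (orthogonal, as in equation 15)
print(cross((1, 0, 0), (0, 1, 0)))   # (0, 0, 1), i.e. i x j = k
print(math.degrees(angle_between((1, 0, 0), (0, 1, 0))))  # approximately 90
```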

Scalar and Vector Triple Product

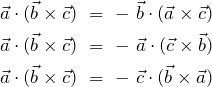

There are also multiple ways of multiplying three vectors together. Since the dot product of two vectors results in a scalar, any further product involving this result simply falls under the normal rules for multiplying by a scalar. However, the cross product of two vectors results in another vector, so we can take this further. What if we take the dot product of this with a third vector? This is known as the scalar triple product and is expressed as [?]:

$$\vec{a}\cdot(\vec{b}\times\vec{c}) \tag{16}$$

The magnitude of the result is the volume of the parallelepiped formed by the three vectors, while its sign depends on the order (handedness) of the vectors. If, like me, you’d never heard of this particular shape when you started, I’ve included a link to the Wikipedia article. There are some interesting properties of this result that you may well need to know for a maths module. Firstly, the whole expression is completely invariant under a cyclic permutation. This just means that if we shift all the operands one place left or right in the expression, wrapping around at the ends, we still get exactly the same answer [?]:

$$\vec{a}\cdot(\vec{b}\times\vec{c}) = \vec{b}\cdot(\vec{c}\times\vec{a}) = \vec{c}\cdot(\vec{a}\times\vec{b}) \tag{17}$$

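Another property worth noting is that swapping any two of the operands negates the triple product, changing its sign:

$$\vec{a}\cdot(\vec{b}\times\vec{c}) = -\vec{a}\cdot(\vec{c}\times\vec{b}) = -\vec{b}\cdot(\vec{a}\times\vec{c}) = -\vec{c}\cdot(\vec{b}\times\vec{a}) \tag{18}$$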

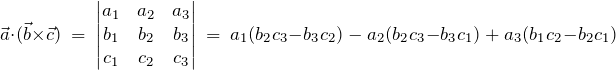

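One final property that will come in useful later is that you can calculate the triple product by taking the determinant of a matrix whose rows are those three vectors [?]:

$$\vec{a}\cdot(\vec{b}\times\vec{c}) = \begin{vmatrix} a_x & a_y & a_z \\ b_x & b_y & b_z \\ c_x & c_y & c_z \end{vmatrix} \tag{19}$$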
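The properties in equations 17 to 19 can be spot-checked numerically. This sketch assumes the same tuple representation as the earlier examples; `det3` and `scalar_triple` are hypothetical helper names, and the three vectors are arbitrary examples that span a parallelepiped of volume 6.

```python
def dot(a, b):
    """Equation 10."""
    return sum(ai * bi for ai, bi in zip(a, b))


def cross(a, b):
    """Equation 13, expanded into components."""
    ax, ay, az = a
    bx, by, bz = b
    return (ay * bz - az * by, az * bx - ax * bz, ax * by - ay * bx)


def scalar_triple(a, b, c):
    """Equation 16: a . (b x c)."""
    return dot(a, cross(b, c))


def det3(m):
    """Determinant of a 3x3 matrix given as three rows, expanded along the first row (equation 19)."""
    (m11, m12, m13), (m21, m22, m23), (m31, m32, m33) = m
    return (m11 * (m22 * m33 - m23 * m32)
            - m12 * (m21 * m33 - m23 * m31)
            + m13 * (m21 * m32 - m22 * m31))


a, b, c = (1, 0, 0), (1, 2, 0), (0, 1, 3)

print(scalar_triple(a, b, c))                          # 6
print(det3([a, b, c]))                                 # 6   (equation 19)
print(scalar_triple(b, c, a), scalar_triple(c, a, b))  # 6 6 (cyclic shift, equation 17)
print(scalar_triple(b, a, c))                          # -6  (swapped pair, equation 18)
```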

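What if we want to take the cross product of a cross product? This is called the vector triple product, and it’s written as:

$$\vec{a}\times(\vec{b}\times\vec{c}) \tag{20}$$

By this definition, the following useful relationship also holds [?]:

$$\vec{a}\times(\vec{b}\times\vec{c}) = \vec{b}\,(\vec{a}\cdot\vec{c}) - \vec{c}\,(\vec{a}\cdot\vec{b}) \tag{21}$$

The cross product is an anti-commutative operation, meaning that $\vec{a}\times\vec{b} = -\vec{b}\times\vec{a}$: you cannot swap the two operands around and get the same answer, as you can with normal multiplication. We can use this to our advantage to derive equivalent expressions, such as:

$$(\vec{a}\times\vec{b})\times\vec{c} = -\vec{c}\times(\vec{a}\times\vec{b}) = \vec{b}\,(\vec{a}\cdot\vec{c}) - \vec{a}\,(\vec{b}\cdot\vec{c}) \tag{22}$$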
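Identities like equations 21 and 22 are easy to mistype, so a numerical spot-check is a useful habit. The sketch below uses arbitrary example vectors and hypothetical helper names (`scale`, `sub`), compares both sides of each identity, and also shows that the cross product is not associative.

```python
def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def cross(a, b):
    ax, ay, az = a
    bx, by, bz = b
    return (ay * bz - az * by, az * bx - ax * bz, ax * by - ay * bx)


def scale(k, v):
    """Multiply every component of v by the scalar k."""
    return tuple(k * vi for vi in v)


def sub(u, v):
    """Component-wise difference u - v."""
    return tuple(ui - vi for ui, vi in zip(u, v))


a, b, c = (1, 2, 3), (4, 5, 6), (-1, 0, 2)

# Equation 21: a x (b x c) = b (a . c) - c (a . b)
lhs21 = cross(a, cross(b, c))
rhs21 = sub(scale(dot(a, c), b), scale(dot(a, b), c))
print(lhs21, rhs21)  # (52, 25, -34) (52, 25, -34)

# Equation 22: (a x b) x c = b (a . c) - a (b . c)
lhs22 = cross(cross(a, b), c)
rhs22 = sub(scale(dot(a, c), b), scale(dot(b, c), a))
print(lhs22, rhs22)  # (12, 9, 6) (12, 9, 6)
```

Since `lhs21` and `lhs22` differ, where you put the brackets in a repeated cross product genuinely matters.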

Vector Calculus

Up until this point we’ve studied vectors and seen how useful they are in a variety of applications in both mathematics and physics. However, in physics especially, studying steady-state problems is particularly dull, and such problems are generally considered ‘trivial solutions’ to the governing equations of a system. We’re a lot more interested in dynamical systems that vary in space and time. For scalars we can study such problems using calculus, and the same is true for vectors, where we use vector calculus. Its operators act on mathematical constructs called fields. During a degree you’ll most likely come across two different types of field: scalar fields and vector fields. A scalar field assigns a number to every point in a given space, representing some quantity there. Scalar fields can represent anything from density to electric potential. A vector field is very similar, but instead of placing a scalar at every point, you place a vector there. This is useful in disciplines such as fluid mechanics for representing the flow of a fluid. I’ll just go over the operators you can use.

Gradient

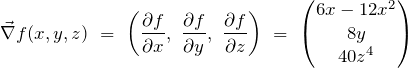

The gradient or grad operator, signified by the nabla symbol $\nabla$, calculates the gradient of a scalar field. Just as the derivative calculates the gradient of a scalar function along one degree of freedom, the grad operator calculates the rate of change along every degree of freedom of a field at the same time and returns the result as a vector, which points in the direction of steepest increase. The grad operator for 3D Euclidean space is defined as:

$$\nabla = \hat{i}\frac{\partial}{\partial x} + \hat{j}\frac{\partial}{\partial y} + \hat{k}\frac{\partial}{\partial z} \tag{23}$$

As an example, let’s say that we have a scalar function $f(x, y, z)$. Now let’s apply the grad operator to this function:

$$\nabla f = \frac{\partial f}{\partial x}\hat{i} + \frac{\partial f}{\partial y}\hat{j} + \frac{\partial f}{\partial z}\hat{k} \tag{24}$$
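When a field is only known numerically, equation 23 is often applied with finite differences. This is a minimal sketch using central differences; the test function $f = x^2 y + z$ is a hypothetical example of mine (its exact gradient is $(2xy, x^2, 1)$), and the step size `h` is an assumed, untuned value.

```python
def gradient(f, x, y, z, h=1e-5):
    """Estimate (df/dx, df/dy, df/dz) at (x, y, z) with central differences."""
    dfdx = (f(x + h, y, z) - f(x - h, y, z)) / (2 * h)
    dfdy = (f(x, y + h, z) - f(x, y - h, z)) / (2 * h)
    dfdz = (f(x, y, z + h) - f(x, y, z - h)) / (2 * h)
    return (dfdx, dfdy, dfdz)


def f(x, y, z):
    """Hypothetical scalar field; its exact gradient is (2xy, x^2, 1)."""
    return x**2 * y + z


g = gradient(f, 1.0, 2.0, 3.0)
print([round(component, 6) for component in g])  # [4.0, 1.0, 1.0]
```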

Divergence

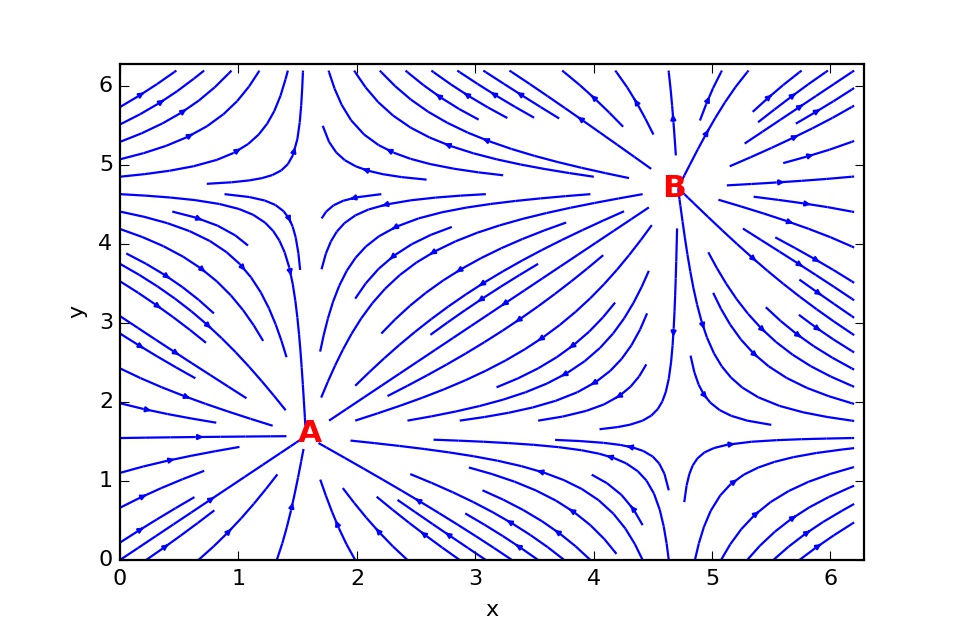

The divergence or div operator is the result of taking the scalar product of the grad operator $\nabla$ with a vector field $\vec{F}$, meaning that $\operatorname{div}\vec{F} = \nabla\cdot\vec{F}$. Physically, this can be thought of as the net flow of the field out of an infinitesimally small region around a point. The number that comes out is a direct indicator of the net flux out of, or into, the point. Its sign indicates the direction of the flow: a positive number indicates divergent flow, where the field is flowing out of a point, and a negative number indicates convergent flow, where the field is flowing towards a point. These can also be described as a source and a sink respectively. For 3D Euclidean space, div is defined as:

$$\nabla\cdot\vec{F} = \frac{\partial F_x}{\partial x} + \frac{\partial F_y}{\partial y} + \frac{\partial F_z}{\partial z} \tag{25}$$

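So, let’s adopt the result of the previous example as a vector field, such that $\vec{F} = \nabla f$. If we now take the div of $\vec{F}$, we get:

$$\nabla\cdot\vec{F} = \nabla\cdot\nabla f = \frac{\partial^2 f}{\partial x^2} + \frac{\partial^2 f}{\partial y^2} + \frac{\partial^2 f}{\partial z^2} \tag{26}$$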

We can now substitute coordinates into this expression to find out whether the field is divergent or convergent at a given point. A large negative value, for instance, would indicate that at those coordinates the field is strongly convergent and the point is acting as an effective sink.
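The sign convention can be checked with two hypothetical fields of my own choosing: $\vec{F} = (x, y, z)$, which points away from the origin everywhere, and its negative, which points towards it. This sketch estimates equation 25 with central differences, under the same assumptions as a finite-difference gradient (an untuned step `h`).

```python
def divergence(F, x, y, z, h=1e-5):
    """Central-difference estimate of dFx/dx + dFy/dy + dFz/dz (equation 25)."""
    dFx = (F(x + h, y, z)[0] - F(x - h, y, z)[0]) / (2 * h)
    dFy = (F(x, y + h, z)[1] - F(x, y - h, z)[1]) / (2 * h)
    dFz = (F(x, y, z + h)[2] - F(x, y, z - h)[2]) / (2 * h)
    return dFx + dFy + dFz


def outward(x, y, z):
    """Hypothetical field pointing away from the origin: a source everywhere."""
    return (x, y, z)


def inward(x, y, z):
    """Hypothetical field pointing towards the origin: a sink everywhere."""
    return (-x, -y, -z)


print(round(divergence(outward, 1.0, 2.0, 3.0), 6))  # 3.0  (positive: source)
print(round(divergence(inward, 1.0, 2.0, 3.0), 6))   # -3.0 (negative: sink)
```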

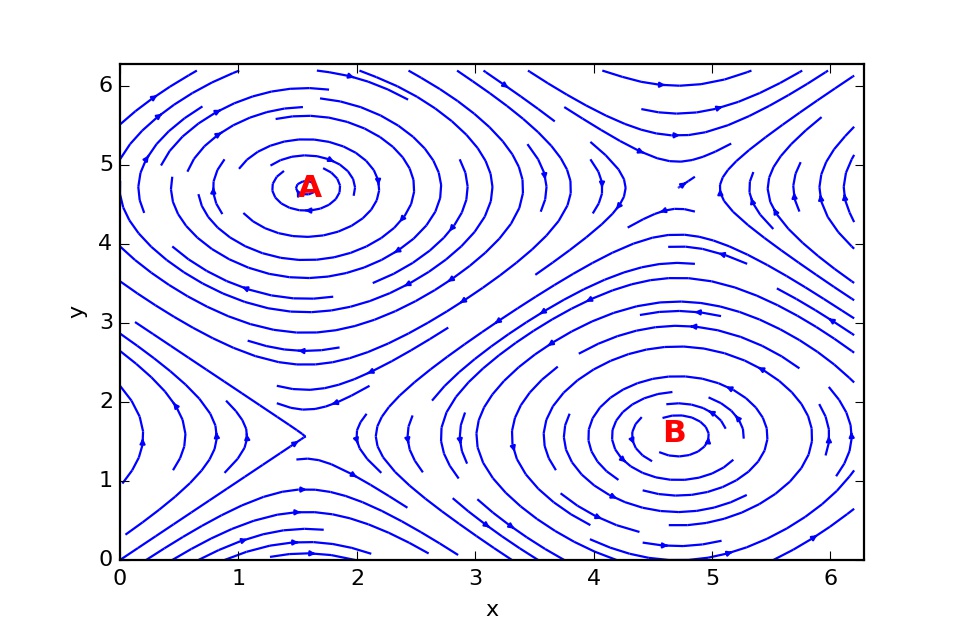

This figure shows a vector field with two points annotated. A shows a point of high convergence, where the arrows are flowing towards a sink. B shows a point of high divergence, where the arrows are flowing away from a source.

Curl

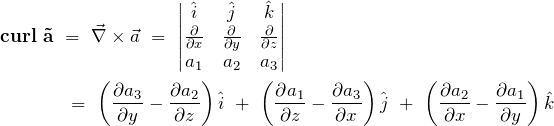

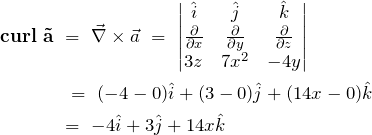

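The final operator that I’m going to discuss in this post is the curl operator. This operator measures the infinitesimal rotation of a vector field at a given point. This is essentially a measure of vorticity, the rotation of a flow. The curl of a field is defined as the cross product of the grad operator with the field, like so:

$$\nabla\times\vec{F} = \left(\frac{\partial F_z}{\partial y} - \frac{\partial F_y}{\partial z}\right)\hat{i} + \left(\frac{\partial F_x}{\partial z} - \frac{\partial F_z}{\partial x}\right)\hat{j} + \left(\frac{\partial F_y}{\partial x} - \frac{\partial F_x}{\partial y}\right)\hat{k} \tag{27}$$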

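As with the cross product, we can use the determinant of a specially crafted matrix to calculate the curl of a vector field $\vec{F}$, like so:

$$\nabla\times\vec{F} = \begin{vmatrix} \hat{i} & \hat{j} & \hat{k} \\ \dfrac{\partial}{\partial x} & \dfrac{\partial}{\partial y} & \dfrac{\partial}{\partial z} \\ F_x & F_y & F_z \end{vmatrix} \tag{28}$$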

Finally, let’s pluck a vector field out of the air and calculate its curl by running it through the mathematics:

(29)

This figure shows another vector field. Points A and B show points of high vorticity, hence a large curl.
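As with divergence, the curl in equation 27 can be estimated numerically. In this sketch, `swirl` is a hypothetical field, $\vec{F} = (-y, x, 0)$, that rotates anticlockwise about the $z$ axis; its exact curl is $2\hat{k}$ everywhere, which gives us something to check against. The step size `h` is again an assumed value.

```python
def curl(F, x, y, z, h=1e-5):
    """Central-difference estimate of the curl of F at (x, y, z), following equation 27."""

    def d(component, axis):
        # Partial derivative of F[component] along axis (0 = x, 1 = y, 2 = z).
        plus = [x, y, z]
        minus = [x, y, z]
        plus[axis] += h
        minus[axis] -= h
        return (F(*plus)[component] - F(*minus)[component]) / (2 * h)

    return (d(2, 1) - d(1, 2),   # dFz/dy - dFy/dz
            d(0, 2) - d(2, 0),   # dFx/dz - dFz/dx
            d(1, 0) - d(0, 1))   # dFy/dx - dFx/dy


def swirl(x, y, z):
    """Hypothetical field rotating anticlockwise about the z axis."""
    return (-y, x, 0.0)


print([round(c, 6) for c in curl(swirl, 1.0, 2.0, 3.0)])  # [0.0, 0.0, 2.0]
```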

Applications

This concludes our whistle stop tour of vectors in physics. It’s at this point you’re probably wondering where you might be able to apply this knowledge of vectors. I’ve discussed the pure mathematics of it, but where can they be applied in physics problems? I’ll just go through some of them now.

Classical Mechanics

Classical mechanics is a discipline in physics that relies almost entirely on vectors, which is why it’s often referred to as vector mechanics. It should come as no surprise that displacements of objects in physics problems are very easily represented using vectors. Not only this, but vectors are ideally suited to representing the velocity and acceleration of an object. Another major use of vectors is the representation of forces in a classical system. Vectors are invaluable here because they can represent not only the direction in which a force acts but also its strength. Many problems that you’ll be faced with in this topic will ask you to resolve forces. This means breaking the forces applied to an object into components and adding them together to come up with a net resultant force [?]. Once you’ve calculated this, and assuming that the force is constant, it’s a trivial matter to use Newton’s second law, $\vec{F} = m\vec{a}$, to calculate the acceleration, velocity and position of an object over time.
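Resolving forces and applying Newton’s second law can be sketched in a few lines of Python. The forces, mass and initial conditions below are made-up values for illustration, and the position update uses the standard constant-acceleration result $\vec{r} = \vec{r}_0 + \vec{v}_0 t + \tfrac{1}{2}\vec{a}t^2$, applied component by component.

```python
# Hypothetical forces in newtons, as (x, y, z) components, acting on a 2 kg mass.
forces = [(10.0, 0.0, 0.0), (0.0, 5.0, 0.0), (-4.0, -5.0, 0.0)]
mass = 2.0


def net_force(forces):
    """Add the forces component by component to get the resultant."""
    return tuple(sum(f[i] for f in forces) for i in range(3))


def position(r0, v0, accel, t):
    """Constant-acceleration kinematics, r = r0 + v0*t + accel*t^2/2, per component."""
    return tuple(r + v * t + 0.5 * acc * t * t for r, v, acc in zip(r0, v0, accel))


F = net_force(forces)
accel = tuple(Fi / mass for Fi in F)  # Newton's second law, a = F / m

print(F)      # (6.0, 0.0, 0.0)
print(accel)  # (3.0, 0.0, 0.0)
print(position((0.0, 0.0, 0.0), (0.0, 1.0, 0.0), accel, 2.0))  # (6.0, 2.0, 0.0)
```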

Electromagnetism

When dealing with electromagnetic forces, it’s easy to lose sight of the fact that you’re actually dealing with the interaction of objects with the underlying electric and magnetic fields. These fields are naturally represented as vector fields, and using this representation we can perform all the operations mentioned above. Some of these operators also appear in Maxwell’s equations, the fundamental equations of electromagnetism. Not only do these describe the initial state and dynamical evolution of a given electromagnetic system, they’re also the root of some important proofs. One of the most iconic is the description of light as coupled, self-propagating electric and magnetic fields. You can also perform integration within the framework of vector calculus. This is involved in the formulation and manipulation of Gauss’s law, which allows the calculation of the electric field around a charge by performing a surface integral over a Gaussian surface surrounding the charge. Also using vector calculus, you can calculate the magnetic field induced by a flowing current using a line integral (Ampère’s law). It’s probably fair to say that electromagnetism is the largest use of vector calculus that you will come across during an undergraduate degree.

Fluid Mechanics

I’ve written extensively about fluid mechanics before in this post, this post and this post, so I won’t bore you with the specifics, but just as in electromagnetism, fluid mechanics makes extensive use of vectors. The flow of a fluid is expressed as a vector field of velocities, and the density of the fluid at each point can be expressed as a scalar field. Using these fields, we can evolve a system with its governing equations. One of these is the momentum equation, known as the Navier-Stokes equation. This equation isn’t easily solvable, so studying the characteristics of the fluid using our operators can allow us to simplify it. The divergence operator can be used to see whether the system we’re studying acts as an effective sink or source, allowing the characterisation of sink or source terms in our equations. The curl operator is a good measure of a system’s vorticity, allowing us to conveniently analyse flow characteristics: whether the system is in a laminar (slow, straight) regime or a turbulent (fast, high vorticity) regime. With these fluid characteristics in mind, we can make meaningful simplifications to equations that make them easier to solve while retaining the physically significant terms.

Quantum Mechanics

At the moment you may find tackling the mathematical rigour of quantum mechanics a little daunting, and that’s because it is. It’s very difficult to represent the often obscure physics in an immediately obvious way, but vectors can actually help with this task. Quantum mechanics makes use of operators in order to obtain physical quantities. Among them is the energy of a quantum system, given by the Hamiltonian operator $\hat{H}$. This can be calculated using Schrödinger’s equation:

$$\hat{H}\psi = -\frac{\hbar^2}{2m}\nabla^2\psi + V\psi = E\psi \tag{30}$$

This is the time-independent version of the equation. You can see that it makes use of the grad operator: $\nabla^2 = \nabla\cdot\nabla$, the Laplacian, is the divergence of the gradient. This important equation allows the calculation of the energies of all possible eigenstates of a particle. In some notations of quantum mechanics, the state of a particle can be represented as a vector. This vector contains probability amplitudes that express the superposition of states that make up a given quantum system, and states can be expanded in this way in terms of the eigenstates of various quantum operators, such as energy and momentum. This representation holds true for any solvable quantum system, such as the infinite square well, known as the particle in a box, and the quantum harmonic oscillator. Although this is a less common use of vectors, it’s still worth noting.

Conclusion

That more or less sums up the basics you’ll probably need to know to get off to a good start with vectors at degree level. It’s worth stressing at this point that this post has focussed purely on 3D Euclidean space with Cartesian basis vectors. The same operations carry over to other coordinate systems, although the explicit formulas for grad, div and curl pick up extra scale factors in curvilinear coordinates. Probably the two most useful are cylindrical coordinates and spherical polar coordinates. Cylindrical coordinates adopt the $\phi$, $\rho$ and $z$ coordinates, which represent the angle around a cylinder, the radius outward from the $z$ axis and the height along it respectively. Spherical polars adopt the $r$, $\phi$ and $\theta$ coordinates, which represent the radius outward from the origin, the angle around in the x-y plane between 0 and $2\pi$, and the elevation angle, which in the usual physics convention is measured from the positive $z$ axis and runs between 0 and $\pi$. There are plenty of references out there, and you can find plenty of examples of their use. As always, I hope this post has been useful, and if you notice anything amiss please do let me know and I’ll correct it ASAP. Until next time, thanks for reading.